Applications running on a Real-Time Operating System (RTOS) are susceptible to system failures caused by concurrency problems such as data and timing collisions and race conditions. Unlike large desktop or server operating systems such as Windows or Linux, where each process stays in its own separate address space, a typical RTOS runs all of its threads together in a single shared address space. These threads often share resources like variables, functions, and hardware peripherals. Without mutual exclusion mechanisms, threads that access and modify a shared resource at the same time can leave it inconsistent or corrupted. Such issues not only jeopardize the reliability of the real-time system but can also lead to catastrophic failures, especially in safety-critical applications where timely and predictable execution is paramount.

Race Conditions

One of the most common issues with data sharing is the race condition. A race condition occurs when two or more pieces of code, running asynchronously, access and modify a shared resource at the same time. Problems typically arise when at least one of them performs a read-modify-write sequence on the resource. If that code is preempted between the read and the write, the preempting code may change the resource, but the preempted code does not see the change: when it resumes, it writes back a value computed from stale data and silently overwrites the other update. This window of vulnerability can lead to data inconsistency, corruption, and even system instability. In a preemptive RTOS kernel, race conditions are a concern between any two concurrent threads that share a resource.
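The lost update described above can be replayed deterministically. The following minimal C sketch writes out one unlucky interleaving of two threads by hand instead of running real threads; `shared_counter` and `lost_update_demo()` are hypothetical names used only for illustration.

```c
/* Shared resource: a counter that two threads both increment. */
static int shared_counter = 0;

/* Replays, step by step, one unlucky interleaving of two threads that each
 * execute `shared_counter = shared_counter + 1;` (a read-modify-write).
 * No real threads are used; the "preemption" is written out by hand. */
int lost_update_demo(void) {
    shared_counter = 0;

    int a_tmp = shared_counter;   /* thread A: read (sees 0)              */
                                  /* ...thread A is preempted here...     */
    int b_tmp = shared_counter;   /* thread B: read (also sees 0)         */
    b_tmp = b_tmp + 1;            /* thread B: modify                     */
    shared_counter = b_tmp;       /* thread B: write (counter is now 1)   */
                                  /* ...A resumes with its stale copy...  */
    a_tmp = a_tmp + 1;            /* thread A: modify (0 + 1)             */
    shared_counter = a_tmp;       /* thread A: write; B's update is lost  */

    return shared_counter;        /* 1, although two increments ran       */
}
```

Calling `lost_update_demo()` returns 1 even though two increments ran. On a real system the same interleaving happens only occasionally, which is what makes race conditions so hard to reproduce.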

Mutual Exclusion In RTOS

Mutual exclusion is pivotal in real-time systems, and implementing it is the responsibility of application developers rather than of the RTOS itself. Every resource shared among threads demands careful consideration to prevent concurrency hazards, which means enforcing mutual exclusion: ensuring that only one thread or interrupt can access any given resource at a time. While this places a significant burden on application developers, the good news is that RTOS kernels offer an array of mutual exclusion mechanisms.

Critical Section

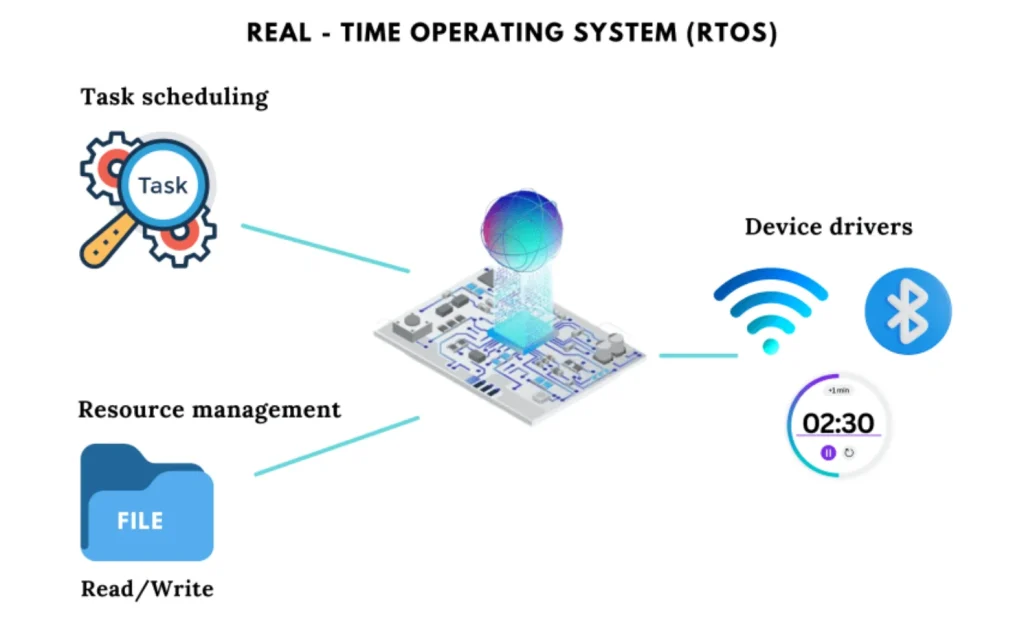

One way to dodge race conditions is simply to disable all interrupts around the code that touches the shared resource. Because preemption in an RTOS is typically triggered by interrupts (such as the system clock tick or an ISR that makes a higher-priority thread ready), disabling them prevents the code from being preempted until interrupts are enabled again. However, this straightforward, unconditional disabling and enabling of interrupts around the critical section has a couple of drawbacks.

First, it’s inconsistent with the interrupt-disabling policy of many RTOS kernels, which mask only the interrupts that interact with the kernel and leave the most urgent ones enabled at all times. The basic critical section disables all interrupts indiscriminately, adding latency even to interrupts that should never be held off. Second, there’s the issue of nesting. Suppose a function that contains its own critical section is called from inside another critical section. If the critical section isn’t designed for nesting, the inner exit re-enables interrupts too early, leaving the rest of the outer critical section unprotected and open to exactly the race condition it was meant to prevent.
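The nesting problem can be shown with a small C model. Since real interrupt-control instructions cannot run on a desktop, this sketch assumes a plain variable, `irq_enabled`, in place of the processor’s interrupt-enable flag; all function names are hypothetical.

```c
#include <stdbool.h>

/* Hypothetical stand-in for the CPU's global interrupt-enable flag; on real
 * hardware this is a processor register, not a variable. */
static bool irq_enabled = true;

/* The basic critical section: unconditional disable / enable. */
static void naive_crit_entry(void) { irq_enabled = false; }
static void naive_crit_exit(void)  { irq_enabled = true; }

static int shared_data = 0;

/* A helper that protects its own access with the basic critical section. */
static void increment_shared(void) {
    naive_crit_entry();
    ++shared_data;
    naive_crit_exit();   /* re-enables interrupts even if the caller had them off */
}

/* Returns true if interrupts are still disabled after the nested call,
 * i.e. if the rest of the outer critical section is still protected. */
bool naive_nesting_is_safe(void) {
    naive_crit_entry();                 /* outer critical section begins */
    increment_shared();                 /* nested critical section       */
    bool protected_now = !irq_enabled;  /* false: interrupts are back on */
    /* ...any shared access here is now exposed to preemption...         */
    naive_crit_exit();
    return protected_now;
}
```

`naive_nesting_is_safe()` returns `false`: the exit inside `increment_shared()` turned interrupts back on while the outer critical section was still in progress.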

To address this, modern RTOS kernels typically implement more refined mechanisms. These mechanisms selectively disable only necessary interrupts and allow for nested critical sections. The design often includes the use of automatic variables or data structures to remember interrupt disable status, along with macros or functions for entering and exiting critical sections.
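A minimal sketch of such a refined critical section follows. It assumes a hypothetical priority-mask variable (loosely in the spirit of a hardware interrupt-priority mask register) instead of a real register, and an assumed `KERNEL_IRQ_LEVEL` threshold: interrupts at or below it are masked, more urgent ones are never touched. The saved status lives in an automatic variable, so every exit restores exactly what its own entry found.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical interrupt-priority mask: interrupts whose priority is at or
 * below irq_mask_level are held off; 0 means nothing is masked. Larger
 * numbers mean more urgent interrupts in this model. */
static uint32_t irq_mask_level = 0;

/* Assumed split: interrupts up to this level call kernel services and must
 * be masked in critical sections; more urgent ones are never masked. */
#define KERNEL_IRQ_LEVEL 5u

typedef uint32_t crit_stat_t;   /* saved interrupt status */

/* Enter: save the current mask and raise it only if it is lower. */
static crit_stat_t crit_entry(void) {
    crit_stat_t saved = irq_mask_level;
    if (irq_mask_level < KERNEL_IRQ_LEVEL) {
        irq_mask_level = KERNEL_IRQ_LEVEL;
    }
    return saved;
}

/* Exit: restore exactly the saved status instead of unmasking everything. */
static void crit_exit(crit_stat_t saved) {
    irq_mask_level = saved;
}

/* Would an interrupt of priority `prio` be taken right now? */
static bool irq_would_fire(uint32_t prio) {
    return prio > irq_mask_level;
}

static int shared_data = 0;

static void increment_shared(void) {
    crit_stat_t stat = crit_entry();   /* status kept in an automatic variable */
    ++shared_data;
    crit_exit(stat);                   /* restores the caller's masked level   */
}

/* Returns true if, after a nested critical section, the outer section is
 * still protected and the urgent interrupt was never masked. */
bool nested_crit_is_safe(void) {
    crit_stat_t stat = crit_entry();          /* outer critical section        */
    bool urgent_ok = irq_would_fire(7);       /* above KERNEL_IRQ_LEVEL: live  */
    increment_shared();                       /* nested critical section       */
    bool still_masked = !irq_would_fire(3);   /* kernel-aware IRQ held off     */
    crit_exit(stat);
    return urgent_ok && still_masked && irq_mask_level == 0;
}
```

Because `crit_exit()` restores the saved status rather than unmasking everything, the nested call leaves the outer section protected, and `nested_crit_is_safe()` returns `true`.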

Semaphores

While critical sections are effective for short, fast-executing code segments, they may fall short in managing longer sections of code due to potential increases in interrupt latency. To address this limitation, semaphores provide a more versatile mechanism for mutual exclusion in real-time systems. Semaphores are synchronization mechanisms used in concurrent programming to control access to shared resources. They work by maintaining a counter, often called the semaphore count, and provide two fundamental operations: wait and signal.

- WAIT OPERATION: When a task wants to access a shared resource, it executes the wait operation on the semaphore associated with that resource. If the semaphore count is greater than zero, the task decrements the count and proceeds. If the count is zero, the task blocks until the count becomes greater than zero.

- SIGNAL OPERATION: When a task finishes using the shared resource, it executes the signal operation on the semaphore, which increments the semaphore count. If any tasks are blocked in a previous wait operation, one of them is unblocked and allowed to proceed.
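The two operations can be modelled in a few lines of C. This is a hypothetical, single-threaded model of a kernel’s bookkeeping (a count plus a FIFO list of blocked task IDs), not a real RTOS API; a real kernel would also switch context when a task blocks or is unblocked. On POSIX systems, `sem_wait()` and `sem_post()` play the roles of wait and signal.

```c
#include <stdbool.h>

#define MAX_WAITERS 8   /* model limit; callers must not exceed it */

/* Hypothetical kernel-side view of a counting semaphore. */
typedef struct {
    int count;                 /* the semaphore count            */
    int waiters[MAX_WAITERS];  /* ring buffer of blocked task IDs */
    int head, len;
} model_sem_t;

void model_sem_init(model_sem_t *s, int initial) {
    s->count = initial;
    s->head = 0;
    s->len = 0;
}

/* WAIT: decrement and proceed if the count is positive; otherwise record
 * the task as blocked. Returns true if the task may proceed. */
bool model_sem_wait(model_sem_t *s, int task_id) {
    if (s->count > 0) {
        s->count--;
        return true;
    }
    s->waiters[(s->head + s->len) % MAX_WAITERS] = task_id;
    s->len++;
    return false;
}

/* SIGNAL: increment the count; if a task is blocked, the oldest waiter is
 * unblocked and completes its pending decrement. Returns the ID of the
 * unblocked task, or -1 if nobody was waiting. */
int model_sem_signal(model_sem_t *s) {
    s->count++;
    if (s->len == 0) {
        return -1;
    }
    int woken = s->waiters[s->head];
    s->head = (s->head + 1) % MAX_WAITERS;
    s->len--;
    s->count--;   /* the woken task takes the unit it was waiting for */
    return woken;
}
```

Initialized with a count of 1, such a semaphore admits one task at a time: a second task that waits is blocked until the first one signals.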

Unbounded Priority Inversion

Following the discussion on semaphores, it is crucial to recognize a potential challenge in real-time systems known as “unbounded priority inversion”. Picture a scenario where a high-priority task needs a shared resource guarded by a semaphore, but the semaphore is currently held by a low-priority task. While holding the resource, the low-priority task may itself block waiting for another resource, or it may be preempted by medium-priority tasks that do not need the resource at all. When the system clock tick then makes the high-priority task ready, it immediately blocks on the semaphore, because the semaphore is still held by the low-priority task, which in turn cannot run. Since the medium-priority tasks can keep the low-priority task off the CPU for an arbitrarily long time, the high-priority task’s blocking time has no upper bound, and it can miss its hard real-time deadline.

![High priority task [HP] Medium priority task [MP] Low priority task [LP]](http://oxeltech.de/wp-content/uploads/2024/02/3-1.png)
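The scenario can be reproduced with a tiny tick-based scheduler model in C. Everything here is an assumption made for illustration: three tasks with priorities 1 (LP), 2 (MP), and 3 (HP), a low-priority critical section three ticks long, and a medium-priority task that never touches the resource but runs for `mp_work` ticks. `hp_response_time()` and `sim_task_t` are hypothetical names.

```c
#include <stdbool.h>

/* Hypothetical tick-based model: larger number = higher priority. Each
 * tick, the scheduler runs the highest-priority task that is ready and
 * not blocked on the semaphore. */
enum { LP, MP, HP, NTASK };

typedef struct {
    int prio;       /* fixed priority: a classic semaphore never changes it */
    int release;    /* tick at which the task becomes ready */
    int work;       /* ticks of CPU time the task needs */
    bool uses_res;  /* true: all of its work is inside the critical section */
    int done;       /* ticks executed so far */
} sim_task_t;

/* Ticks from HP's release to HP's completion, as a function of how long
 * the unrelated medium-priority task runs. */
int hp_response_time(int mp_work) {
    sim_task_t t[NTASK] = {
        [LP] = {1, 0, 3, true, 0},         /* takes the semaphore at tick 0 */
        [MP] = {2, 2, mp_work, false, 0},  /* never touches the resource    */
        [HP] = {3, 1, 1, true, 0},         /* needs the resource at tick 1  */
    };
    int owner = -1;                        /* task holding the semaphore    */

    for (int now = 0; now < 100000; ++now) {
        int best = -1;
        for (int i = 0; i < NTASK; ++i) {
            bool ready = t[i].release <= now && t[i].done < t[i].work;
            bool blocked = t[i].uses_res && owner != -1 && owner != i;
            if (ready && !blocked && (best == -1 || t[i].prio > t[best].prio)) {
                best = i;
            }
        }
        if (best == -1) continue;                          /* idle tick      */
        if (t[best].uses_res && owner == -1) owner = best; /* wait succeeds  */
        if (++t[best].done == t[best].work) {
            if (owner == best) owner = -1;                 /* signal         */
            if (best == HP) return now + 1 - t[HP].release;
        }
    }
    return -1;  /* HP never finished within the simulated horizon */
}
```

With this model, `hp_response_time(0)` returns 3, `hp_response_time(10)` returns 13, and `hp_response_time(100)` returns 103: the high-priority task’s response time grows tick for tick with the run time of a task it does not even interact with, which is what makes the inversion unbounded.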

This problem arises because classic semaphores, while effective in synchronizing threads, are unaware of thread priorities. Semaphores were first introduced for timesharing systems, which had no thread priorities, so priority inversion was not a concern. In modern priority-based real-time operating systems, however, classic semaphores can inadvertently lead to priority inversion.

Classic semaphores are therefore not suitable for mutual exclusion in priority-based systems, which brings us to two more modern mutual exclusion mechanisms. Both are designed to prevent unbounded priority inversion and, when used correctly, can also help avoid other tricky problems such as deadlock.

Selective Scheduler Locking

With this method, the scheduler-locking function takes one crucial parameter: the priority ceiling. While the lock is held, threads at or below the specified ceiling are not scheduled, so they cannot interfere with the critical section protected by the lock. Threads with priorities above the ceiling continue to be scheduled as usual.

It’s worth noting that ISRs, whose priorities sit above all threads, remain entirely unaffected, so interrupt latency does not increase. Like a critical section, selective scheduler locking is a non-blocking mutual exclusion mechanism. The difference is that it only prevents scheduling of threads up to the specified priority ceiling, allowing higher-priority threads and all interrupts to proceed without disruption.
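The lock’s behaviour can be captured in a small C model. The names `sel_sched_lock()`, `sel_sched_unlock()`, and `sel_sched_would_preempt()` are hypothetical rather than any particular kernel’s API, and larger numbers are assumed to mean higher priority. Returning the previous ceiling from the lock call lets locks nest, much like the saved status of a nestable critical section.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical scheduler state: threads whose priority is at or below
 * lock_ceiling are not scheduled. 0 means the scheduler is unlocked.
 * Larger number = higher priority. */
static uint8_t lock_ceiling = 0;

typedef uint8_t sel_sched_status_t;   /* previous ceiling, for nesting */

/* Lock the scheduler up to `ceiling`; returns the status to restore.
 * A lower ceiling never weakens a lock that is already in place. */
sel_sched_status_t sel_sched_lock(uint8_t ceiling) {
    sel_sched_status_t prev = lock_ceiling;
    if (ceiling > lock_ceiling) {
        lock_ceiling = ceiling;
    }
    return prev;
}

/* Restore the status saved by the matching sel_sched_lock() call. A real
 * kernel would also check here whether a thread that became ready while
 * the lock was held should now preempt the current one. */
void sel_sched_unlock(sel_sched_status_t prev) {
    lock_ceiling = prev;
}

/* Would a thread of priority `prio` that just became ready preempt the
 * running thread (priority `running_prio`)? ISRs are not modelled: the
 * lock never touches interrupts, so interrupt latency is unaffected. */
bool sel_sched_would_preempt(uint8_t prio, uint8_t running_prio) {
    return prio > running_prio && prio > lock_ceiling;
}
```

In use, a thread would lock with a ceiling equal to the highest priority among the threads that share the resource, access the resource without blocking, and then unlock with the saved status.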

However, selective scheduler locking has one limitation: the thread holding the lock must not block, for example by calling a blocking delay or waiting on a semaphore. In practice this is rarely a hardship, because blocking while accessing a shared resource is discouraged as a best practice anyway.

Mutexes

This brings us to the final and most sophisticated mutual exclusion mechanism: the mutex. The term “mutex” is short for MUTual EXclusion, and a mutex is sometimes called a mutual exclusion semaphore. This RTOS object is specifically designed to safeguard resources shared among concurrent threads, including scenarios where a thread may block while accessing the shared resource.

To prevent unbounded priority inversion, mutexes commonly use one of two widely recognized protocols: Priority Ceiling and Priority Inheritance.
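On a POSIX system (used here only because it runs on a desktop; the article itself is RTOS-agnostic), the protocol is chosen per mutex with `pthread_mutexattr_setprotocol()`. The sketch below selects `PTHREAD_PRIO_INHERIT`; protocol support is optional in POSIX, so the call may fail with an error code on some platforms. The names `res_mutex`, `shared_total`, `res_mutex_init()`, and `res_add()` are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

/* Hypothetical shared resource and the mutex that guards it. */
static pthread_mutex_t res_mutex;
static long shared_total = 0;

/* Create the mutex with the priority-inheritance protocol. Returns 0 on
 * success or the error code of the failing call (e.g. ENOTSUP if the
 * platform does not support the protocol). */
int res_mutex_init(void) {
    pthread_mutexattr_t attr;
    int rc = pthread_mutexattr_init(&attr);
    if (rc != 0) return rc;
    rc = pthread_mutexattr_setprotocol(&attr, PTHREAD_PRIO_INHERIT);
    if (rc == 0) rc = pthread_mutex_init(&res_mutex, &attr);
    pthread_mutexattr_destroy(&attr);  /* an initialized mutex is unaffected */
    return rc;
}

/* Read-modify-write on the shared resource, protected by the mutex. On
 * platforms that implement the protocol, if a higher-priority thread blocks
 * in pthread_mutex_lock() meanwhile, the owner inherits its priority until
 * pthread_mutex_unlock(). */
void res_add(long amount) {
    pthread_mutex_lock(&res_mutex);
    shared_total += amount;
    pthread_mutex_unlock(&res_mutex);
}
```

For the Priority Ceiling Protocol, `PTHREAD_PRIO_PROTECT` would be passed instead, together with a ceiling set through `pthread_mutexattr_setprioceiling()`.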

- Under the Priority Ceiling Protocol, a task that acquires the mutex is immediately raised to the mutex’s ceiling priority. The priority ceiling must be higher than the highest priority of all tasks that can access the resource, which ensures that the task owning the shared resource cannot be preempted by any other task attempting to access the same resource. Once the task releases the resource, it reverts to its original priority level.

![Mutex Ceiling priority High priority task [HP] Medium priority task [MP] Low priority task [LP]](http://oxeltech.de/wp-content/uploads/2024/02/4-1.png)
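To see the protocol’s effect, here is a minimal tick-based model in C of the three tasks in the figure. The priorities (1, 2, 3), the three-tick critical section, and a ceiling of 4 (above HP, as the text requires) are assumptions for illustration; `mp_work` is how long the medium-priority task runs, and all names are hypothetical.

```c
#include <stdbool.h>

/* Hypothetical tick-based model: larger number = higher priority. Each
 * tick, the highest-priority task that is ready and not blocked runs. */
enum { LP, MP, HP, NTASK };
#define MUTEX_CEILING 4   /* assumed: above HP, the highest-priority user */

typedef struct {
    int prio;       /* base priority */
    int release;    /* tick at which the task becomes ready */
    int work;       /* ticks of CPU time the task needs */
    bool uses_res;  /* true: all of its work is inside the critical section */
    int done;       /* ticks executed so far */
} sim_task_t;

/* Ticks from HP's release to HP's completion under the priority-ceiling
 * protocol, given how long the unrelated medium-priority task runs. */
int hp_response_time_ceiling(int mp_work) {
    sim_task_t t[NTASK] = {
        [LP] = {1, 0, 3, true, 0},         /* takes the mutex at tick 0    */
        [MP] = {2, 2, mp_work, false, 0},  /* never touches the resource   */
        [HP] = {3, 1, 1, true, 0},         /* needs the resource at tick 1 */
    };
    int owner = -1;                        /* task holding the mutex */

    for (int now = 0; now < 100000; ++now) {
        int best = -1, best_prio = 0;
        for (int i = 0; i < NTASK; ++i) {
            bool ready = t[i].release <= now && t[i].done < t[i].work;
            bool blocked = t[i].uses_res && owner != -1 && owner != i;
            /* The owner runs at the ceiling for as long as it holds the mutex. */
            int eff = (i == owner) ? MUTEX_CEILING : t[i].prio;
            if (ready && !blocked && (best == -1 || eff > best_prio)) {
                best = i;
                best_prio = eff;
            }
        }
        if (best == -1) continue;                          /* idle tick       */
        if (t[best].uses_res && owner == -1) owner = best; /* lock: to ceiling */
        if (++t[best].done == t[best].work) {
            if (owner == best) owner = -1;                 /* unlock: to base */
            if (best == HP) return now + 1 - t[HP].release;
        }
    }
    return -1;  /* HP never finished within the simulated horizon */
}
```

Here the function returns 3 for any `mp_work`: the low-priority task runs at the ceiling from the moment it takes the mutex, so the medium-priority task can no longer delay the high-priority task.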

- The Priority Inheritance Protocol, by contrast, adjusts task priorities dynamically based on mutex ownership. When a low-priority task acquires a shared resource, it keeps running at its original priority. Only if a high-priority task requests the resource is the low-priority task raised to the priority of the requesting task. The low-priority task then finishes its critical section and releases the resource, at which point it returns to its original priority and the high-priority task can acquire the resource.

![High priority task [HP] Medium priority task [MP] Low priority task [LP]](http://oxeltech.de/wp-content/uploads/2024/02/5-1.png)
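The same kind of tick-based model can illustrate priority inheritance. As before, the three tasks, their priorities (1, 2, 3), and the three-tick critical section are assumptions for illustration, and all names are hypothetical. The owner’s effective priority is recomputed every tick as the highest priority among itself and the tasks currently blocked on the mutex.

```c
#include <stdbool.h>

/* Hypothetical tick-based model: larger number = higher priority. */
enum { LP, MP, HP, NTASK };

typedef struct {
    int prio;       /* base priority */
    int release;    /* tick at which the task becomes ready */
    int work;       /* ticks of CPU time the task needs */
    bool uses_res;  /* true: all of its work is inside the critical section */
    int done;       /* ticks executed so far */
} sim_task_t;

/* Ticks from HP's release to HP's completion under priority inheritance,
 * given how long the unrelated medium-priority task runs. */
int hp_response_time_inherit(int mp_work) {
    sim_task_t t[NTASK] = {
        [LP] = {1, 0, 3, true, 0},         /* takes the mutex at tick 0    */
        [MP] = {2, 2, mp_work, false, 0},  /* never touches the resource   */
        [HP] = {3, 1, 1, true, 0},         /* needs the resource at tick 1 */
    };
    int owner = -1;                        /* task holding the mutex */

    for (int now = 0; now < 100000; ++now) {
        bool ready[NTASK];
        int inherited = 0;   /* highest priority among tasks blocked on the mutex */
        for (int i = 0; i < NTASK; ++i) {
            ready[i] = t[i].release <= now && t[i].done < t[i].work;
            bool blocked = t[i].uses_res && owner != -1 && owner != i;
            if (ready[i] && blocked && t[i].prio > inherited) {
                inherited = t[i].prio;
            }
        }
        int best = -1, best_prio = 0;
        for (int i = 0; i < NTASK; ++i) {
            bool blocked = t[i].uses_res && owner != -1 && owner != i;
            /* The owner runs at the highest priority of any task it blocks. */
            int eff = (i == owner && inherited > t[i].prio) ? inherited : t[i].prio;
            if (ready[i] && !blocked && (best == -1 || eff > best_prio)) {
                best = i;
                best_prio = eff;
            }
        }
        if (best == -1) continue;                          /* idle tick        */
        if (t[best].uses_res && owner == -1) owner = best; /* lock             */
        if (++t[best].done == t[best].work) {
            if (owner == best) owner = -1;                 /* unlock: inherited
                                                              priority dropped */
            if (best == HP) return now + 1 - t[HP].release;
        }
    }
    return -1;  /* HP never finished within the simulated horizon */
}
```

The result is 3 regardless of `mp_work`, but unlike under a priority ceiling, the low-priority task is raised only at tick 1, when the high-priority task actually blocks on the mutex; until then it runs at its own priority.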

Conclusion

In essence, each of these mechanisms protects shared resources at a price: critical sections add interrupt latency, selective scheduler locking forbids blocking while the lock is held, and mutexes, the most flexible option, are also the most sophisticated. These trade-offs demand careful consideration in real-time system design, because the choices made in managing shared resources directly impact the system’s real-time performance and the balance between mutual exclusion and system responsiveness.